What a folding ruler can tell us about neural networks

Researchers at the University of Basel have developed mechanical models that can predict how effectively the different layers of a deep neural network transform data. Their results improve our understanding of these complex systems and suggest better strategies for training neural networks.

10 July 2025 | Oliver Morsch

Deep neural networks are at the heart of artificial intelligence, ranging from pattern recognition to large language and reasoning models like ChatGPT. The principle: during a training phase, the parameters of the network’s artificial neurons are optimized in such a way that they can carry out specific tasks, such as autonomously discovering objects or characteristic features in images.

How exactly this works, and why some neural networks are more powerful than others, isn’t easy to understand. A rigorous mathematical description seems out of reach of current techniques. However, such an understanding is important if one wants to build artificial intelligence whilst minimizing resources.

A team of researchers led by Prof. Dr. Ivan Dokmanić at the Department for Mathematics and Computer Science of the University of Basel have now developed a surprisingly simple model that reproduces the main features of deep neural networks and that allows one to optimize their parameters. They recently published their results in the scientific journal “Physical Review Letters”.

Division of labour in a neural network

Deep neural networks consist of several layers of neurons. When learning to classify objects in images, the network approaches the answer layer by layer. This gradual approach, during which two classes – for instance, “cat” and “dog” - are more and more clearly distinguished, is called data separation. “Usually each layer in a well-performing network contributes equally to the data separation, but sometimes most of the work is done by deeper or shallower layers”, says Dokmanić.

This depends, among other things, on how the network is constructed: do the neurons simply multiply incoming data with a particular factor, which experts would call “linear”? Or do they carry out more complex calculations - in other words, is the network is “nonlinear”? A further consideration: in most cases, the training phase of neural networks also contains an element of randomness or noise. For instance, in each training round a random subset of neurons can simply be ignored regardless of their input. Strangely, this noise can improve the performance of the network.

“The interplay between nonlinearity and noise results in very complex behaviour which is challenging to understand and predict”, says Dokmanić. “Then again, we know that an equalized distribution of data separation between the layers increases the performance of networks”. So, to be able to make some progress, Dokmanić and his collaborators took inspiration from physical theories and developed macroscopic mechanical models of the learning process which can be intuitively understood.

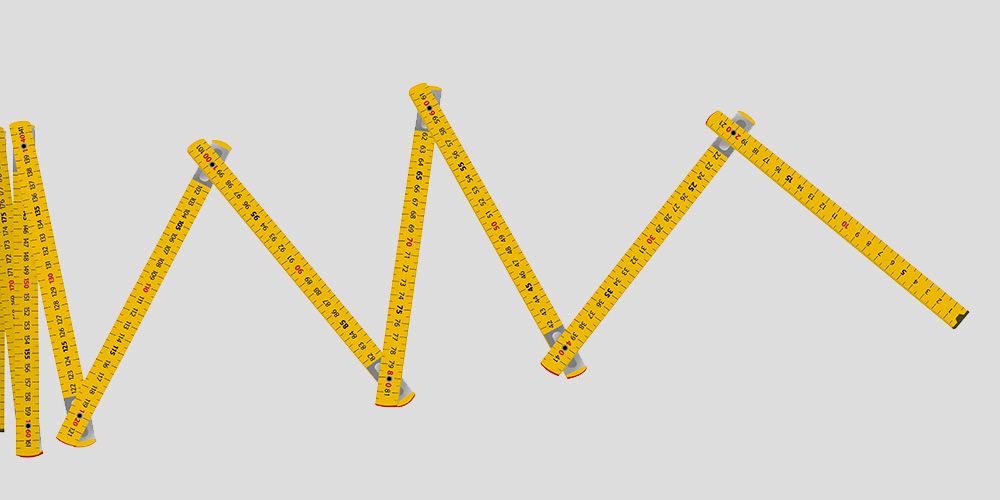

Pulling and shaking the folding ruler

One such model is a folding ruler whose individual sections correspond to the layers of the neural network and that is pulled open at one end. In this case, the nonlinearity comes from the mechanical friction between the sections. Noise can be added by erratically shaking the end of the folding ruler while pulling.

The result of this simple experiment: if one pulls the ruler slowly and steadily, the first sections unfold while the rest remains largely closed. “This corresponds to a neural network in which the data separation happens mainly in the shallow layers”, explains Cheng Shi, a PhD student in Dokmanić’s group and first author of the study. Conversely, if one pulls fast while shaking it a little bit, the folding ruler ends up nicely and evenly unfolded. In a network, this would be a uniform data separation.

“We have simulated and mathematically analysed similar models with blocks connected by springs, and the agreement between the results and those of ‘real’ networks is almost uncanny”, says Shi. The Basel researchers are planning to apply their method to large language models soon. In general, such mechanical models could be used in the future to improve the training of high-performance deep neural networks without the trial-and-error approach that is traditionally used to determine optimal values of parameters like noise and nonlinearity.

Original publication

Cheng Shi, Liming Pan, Ivan Dokmanić

Spring-block theory of feature learning in deep neural networks.

Physical Review Letters (2025), doi: 10.1103/ys4n-2tj3